Talking Past Each Other

AI Bias and the Cost to Society

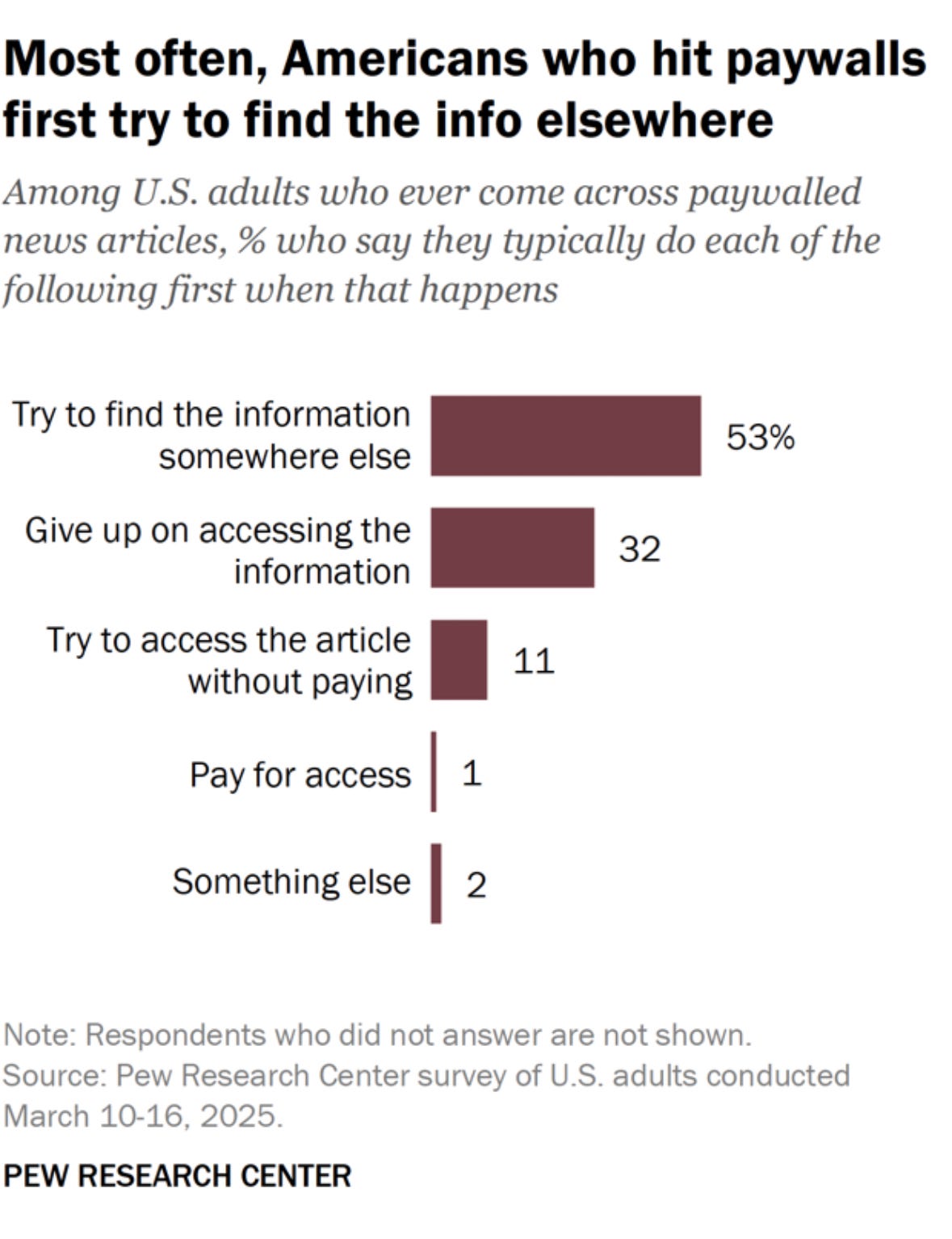

I hate when a friend drops a link and a paywall pops up. In the three-second sigh before I swipe away, I join the 53% of Americans who look elsewhere when a website charges. Only 1% pony up. Those tiny aborted clicks add up to stalled conversations and, increasingly, feed the thin data diet AI models live on.

The Knowledge Economy and Rising Paywalls

In today’s “knowledge economy,” high-quality information is increasingly treated as a premium commodity. Mainstream news outlets and content creators, facing declining ad revenues, have erected paywalls or other barriers around their content. This trend means that much of the well-vetted, professional knowledge online is no longer freely accessible. A Pew Research Center survey found that 74% of Americans encounter paywalls at least sometimes when seeking news, yet 83% have not paid for any news in the past year. In practice, when readers hit these restrictions, very few pull out a credit card. Instead, most will simply navigate away in search of free information.

For publishers, this is an economic survival strategy. If AI systems scrape their work without compensation or attribution, the “old contract” of the internet (free content subsidized by ads and traffic) breaks down. Understandably, many mainstream outlets feel compelled to lock down their articles to protect revenue and intellectual property. Industry leaders like The New York Times have even pursued legal action, suing OpenAI for copyright infringement over the use of its articles to train AI models. In short, quality journalism and expert knowledge are increasingly tucked behind paywalls or bot-blocking shields. The unfortunate side effect is that the open web is left with fewer authoritative voices, as free knowledge resources diminish without new economic models to support them.

Fringe Voices Fill the Free Content Void

As mainstream sources retreat behind paywalls and block AI crawlers, a different set of voices rushes in to fill the freely accessible space. Many alternative, partisan, or “fringe” outlets choose to remain wide open. Notably, a recent analysis by Originality AI revealed that nearly 90% of top left-leaning or centrist news sites now block web scrapers like OpenAI’s GPTBot, while none of the surveyed major right-wing sites did. Prominent conservative outlets such as Fox News, Breitbart, and NewsMax have left their content accessible to AI, in stark contrast to their more liberal counterparts. When asked why, Originality AI founder and CEO Jon Gillham mused that it might be a deliberate strategy: “If the entire left-leaning side is blocking, you could say, come on over here and eat up all of our right-leaning content.” While ultra-conservative blogs gain visibility because they’re free, hyper-partisan left outlets like Occupy Democrats and The Grayzone also circulate unvetted claims at no cost. Both ends of the spectrum exploit the open web’s reach, leaving rigorous reporting behind paywalls. In other words, fringe and partisan publishers see an opportunity to amplify their influence by feeding unrestricted content into the new generation of AI models.
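For the curious, blocking a crawler like GPTBot usually comes down to a few lines in a site's robots.txt file, which well-behaved bots are expected to honor. Here is a minimal sketch, in Python, of how one might check whether a given robots.txt turns GPTBot away. It uses the standard library's `urllib.robotparser`. The two sample files and the helper name `blocks_crawler` are hypothetical, made up for illustration, and are not taken from any real outlet or from the Originality AI analysis.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents, invented for this example.
BLOCKING_SITE = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""

OPEN_SITE = """\
User-agent: *
Allow: /
"""


def blocks_crawler(robots_txt: str, agent: str = "GPTBot") -> bool:
    """Return True if this robots.txt disallows `agent` from the site root."""
    parser = RobotFileParser()
    # parse() takes an iterable of lines, so no network request is needed.
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(agent, "/")


print(blocks_crawler(BLOCKING_SITE))  # the first sample singles out GPTBot
print(blocks_crawler(OPEN_SITE))      # the second sample lets every bot in
```

Keep in mind that robots.txt is a request, not a lock. A check like this shows what a publisher has asked for, not what any crawler actually did.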

Economic incentives also play a role. Free access aligns with the business models of many alternative outlets that rely on maximizing reach (for ad revenue, donations, or political impact) rather than subscription income. Moreover, there’s a self-reinforcing audience dynamic: Demographics less inclined to pay for news gravitate toward these free sources, and the outlets in turn keep content ungated to maintain that audience. Pew Research finds, for instance, that highly educated Americans and Democrats are far more likely to pay for news than those with less education or Republicans. This suggests many right-leaning consumers stick to freely available news, a demand that right-wing outlets are happy to supply. In effect, the public sphere of information is splitting into two tiers: one of paywalled, curated content for those who can afford it, and another of open, often unfiltered content that skews toward sensationalism or ideological extremes.

AI Models and the Risk of a Rightward Tilt

These trends raise a critical question: What happens when AI language models learn predominantly from the free-tier content? Large language models are typically trained on vast swaths of the internet. If the most reputable mainstream knowledge is locked away or labeled “off-limits” to crawlers, while less regulated sources remain abundant, the training data may become imbalanced. Over time, the concern is that AI models might develop an intrinsic slant simply because the accessible corpus tilts in a certain direction. The scenario is no longer hypothetical. AI observers note that current models already reflect their diet of data: “AI models reflect the biases of their training data,” explains Originality AI CEO Jon Gillham, meaning that if one ideology disproportionately populates the training set, the model’s outputs could shift accordingly.

We are now seeing early evidence that supports this possibility. For example, a 2025 study by Chinese researchers found that OpenAI’s ChatGPT has begun drifting toward more right-leaning responses over time, even if it started from a neutral or slightly left-libertarian stance. The study’s authors described a “significant rightward tilt” emerging in how GPT-3.5 and GPT-4 answered political compass questions when tested repeatedly. It’s difficult to pin down all the causes of this shift, which could stem from updated training data, fine-tuning choices, or alignment tweaks, but the observation is noteworthy. It hints that as the web’s content landscape evolves, AI systems might gradually mirror the louder voices on the open internet, unless developers actively counterbalance their training.

To be sure, AI companies are not oblivious to this risk. Many employ human reviewers and reinforcement learning from human feedback (RLHF) to correct for harmful or unbalanced outputs. OpenAI and others have stated that they use “broad collections” of data and strive for neutrality, downplaying the impact of any single source. However, if the underlying pool of freely available data becomes systematically skewed, there is only so much post-training alignment can fix. An AI’s knowledge base is only as good as its training corpus. A model trained on a web where mainstream fact-checked journalism is scarce but websites with minimal editorial oversight or clear ideological slant are plentiful will inevitably reflect that imbalance in its default outputs. My worry is that future models might tilt rightward (or toward whatever bias dominates free content) by default, not due to a deliberate agenda but as a side effect of the content ecosystem we’ve built.

What Are We Saying as a Society by Allowing This?

Stepping back, a profound societal question emerges: What does it say about our values and priorities when reliable knowledge is paywalled, while fringe narratives run free? By allowing the commodification of mainstream information without ensuring broad access, we implicitly state that quality knowledge is a privilege, not a public good. The outcome of this choice is a kind of informational partitioning of society. Those who can pay or who actively seek out vetted sources get one version of reality, whereas the broader public, along with the AI systems absorbing our online content, get another version, one more susceptible to bias, sensationalism, or misinformation.

This dynamic suggests several uncomfortable things about us as a society:

Profit Over Public Knowledge: We have prioritized the monetization of content over the ideal of a well-informed public sphere. It is understandable why media companies enforce paywalls (to survive financially in the digital age), yet the net effect is that truth and expertise often come with a price tag. Meanwhile, dubious information remains free and omnipresent, gaining an outsized voice. Essentially, we’ve left truth on the market shelf, and everything else on the bargain rack.

Knowledge Inequality: We appear willing to tolerate a widening gap in information access. Just as income inequality separates what different socio-economic groups can afford, information inequality now separates what different groups know. The fact that only 1 in 6 Americans pays for any news, and that this minority is skewed toward the educated and affluent, hints at a future where educated elites follow high-quality journalism while others subsist on clickbait and partisan spin. When AI is added to the mix, the risk is that the “collective intelligence” we delegate to machines will be built on the lowest common denominator of content.

Complacency Toward Extremism: By doing little to keep reputable information freely accessible (for example, through public funding, open-access initiatives, or creative licensing), we’ve effectively ceded the open-information arena to more extreme voices. Society’s tacit allowance of this state of affairs sends a signal: we are comfortable if the loudest freely available voices shape the narrative. If future AIs tilt rightward or toward other dominant biases in free data, it will be a reflection of our collective inaction in preserving a balanced knowledge commons.

In allowing this divide, we are witnessing a turning point in how information flows in our democracy. The “knowledge economy” model, where information is a product to be bought and sold, is colliding with the ideal of the internet as a democratizer of knowledge. The collision’s fallout is all around us: polarized public opinion, mistrust in mainstream expertise, and now the prospect of AI that might echo and amplify those imbalances.

So, what are we saying as a society? In essence, we are saying that we value knowledge, but we’re willing to let market forces and ideological opportunists decide who gets to access it. We’re saying that it’s acceptable for our new intelligent tools to potentially inherit the skewed landscape we’ve created, rather than ensuring they’re built on a foundation of balanced and factual information. This unspoken decision may come back to haunt us. If we want AI systems, and the society using them, to be grounded in truth and fairness, we might need to collectively rethink how we treat knowledge itself: as a commons to nurture, not just a commodity to hoard. Only by addressing the underlying economics and accessibility of information can we hope to prevent our future models, and by extension our public discourse, from tilting by default toward the loudest free voices in the room.

Connection Blueprint

But I’m not all doom and gloom. Here are a few blueprints I think we can embrace to build and sustain connections:

Freemium fact-checks. Partner with paywalled outlets to release short, ad-supported summaries so truth still travels.

Bias “nutrition labels.” Push model vendors to show a simple pie chart or breakdown of sources in every major release.

Protocol-level royalties. Build open-access APIs that pay publishers when autonomous agents pull facts.

Collective-rights APIs. Let smaller publishers pool content and negotiate fair AI-training fees. Think ASCAP for journalism.

Data-diversity funds. VCs and foundations chip in to keep reputable archives open and licensable for model training.

Personal practice. Quote, link, and credit paywalled work when you discuss it. This signals demand for sustainable access.
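To make the "nutrition label" idea a little more concrete, here is a rough sketch of the arithmetic behind one: tally a training set by source category and report each category's share. Everything in it is an assumption for illustration. The category names, the sample counts, and the function `source_breakdown` are invented, and no model vendor is known to publish labels in this form.

```python
from collections import Counter


def source_breakdown(labels):
    """Return each source category's share of the corpus, as a percentage."""
    counts = Counter(labels)
    total = sum(counts.values())
    if total == 0:
        return {}
    # most_common() orders categories from largest share to smallest.
    return {
        category: round(100 * n / total, 1)
        for category, n in counts.most_common()
    }


# Hypothetical corpus: one label per document, made up for this example.
sample_corpus = (
    ["open partisan"] * 5
    + ["reference"] * 3
    + ["licensed news"] * 2
)

for category, share in source_breakdown(sample_corpus).items():
    print(f"{category}: {share}%")
```

The hard part in practice is not the counting but the labeling. Someone has to decide which bucket each source belongs in, and that judgment is exactly where a label would need outside scrutiny.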

For my part, I know that I can do more. I’ll always keep this kind of writing free. I’ll continue to put myself in conversation with different kinds of people. I’m going to try to listen more. I invite you to do the same. Share this essay and start a conversation with your friends. Comment here, even if you don’t fully agree with me. I promise you it’ll go further than you think. Knowledge is only as common as the doors we leave unlatched. What will you prop open this week?
